Project 2

Human-AI Teams and Complementary Expertise

People use AI systems for all kinds of recommendations, from identifying tumors in medical images to choosing the right shoes for an outfit. A key factor in how they use these recommendations is how skilled they believe the AI is. This research examines how people trust and rely on an AI assistant whose expertise differs from the user’s to varying degrees, ranging from identical skills to entirely different skills that complement the user’s own.

This project focuses on two main questions:

- How does the degree of complementary expertise affect team performance, reliance behavior, and trust in AI-assisted decision making?

- How does embracing or distancing language in AI explanations affect trust, reliance behavior, and team performance in AI-assisted decision making across different levels of complementary expertise?

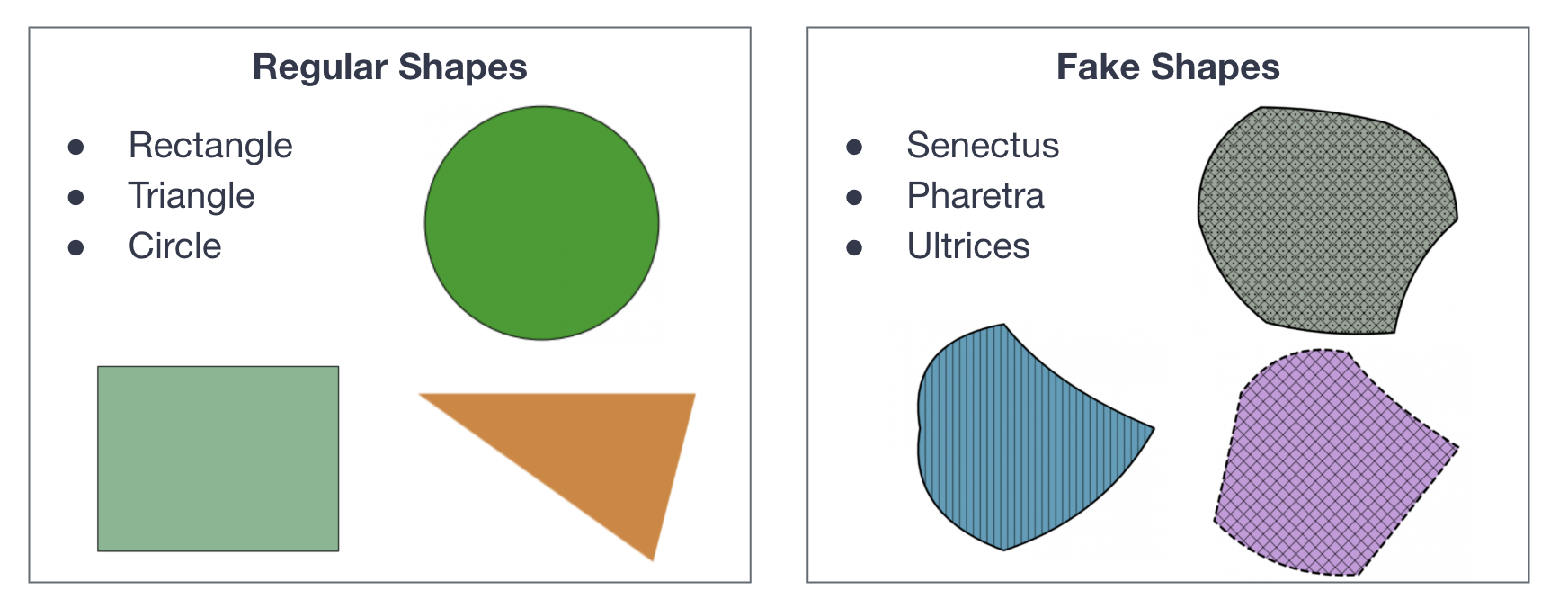

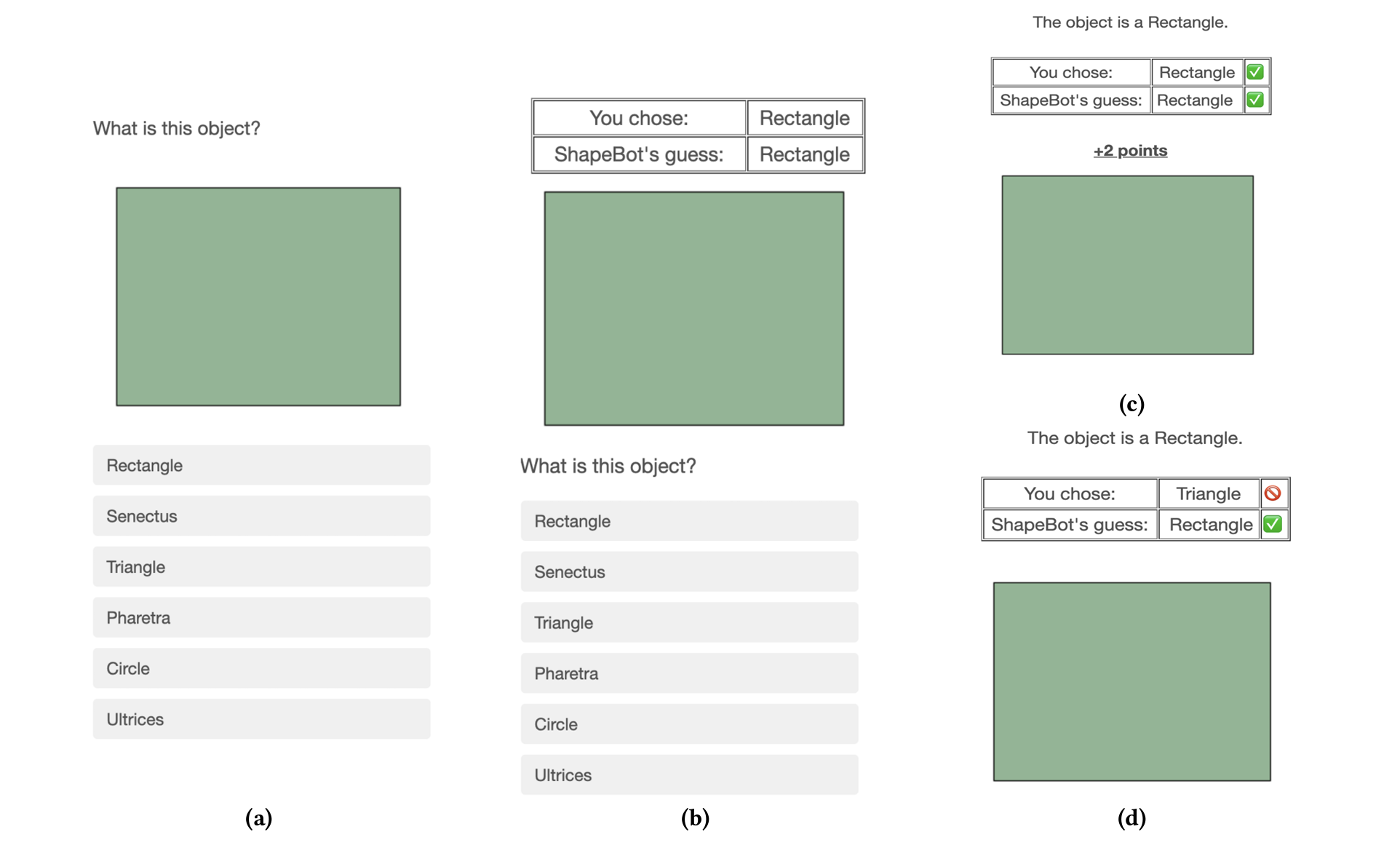

To explore these questions, we conducted a series of online lab studies. In the first study, participants completed a shape identification task with the help of an AI assistant that had varying levels of complementary expertise. The results showed that participants could tell whether the AI was an expert and relied on it more as its complementary expertise increased, which improved team performance. However, their trust in the AI did not always follow this trend, suggesting that the AI needs to communicate its expertise more clearly to foster an appropriate level of trust.

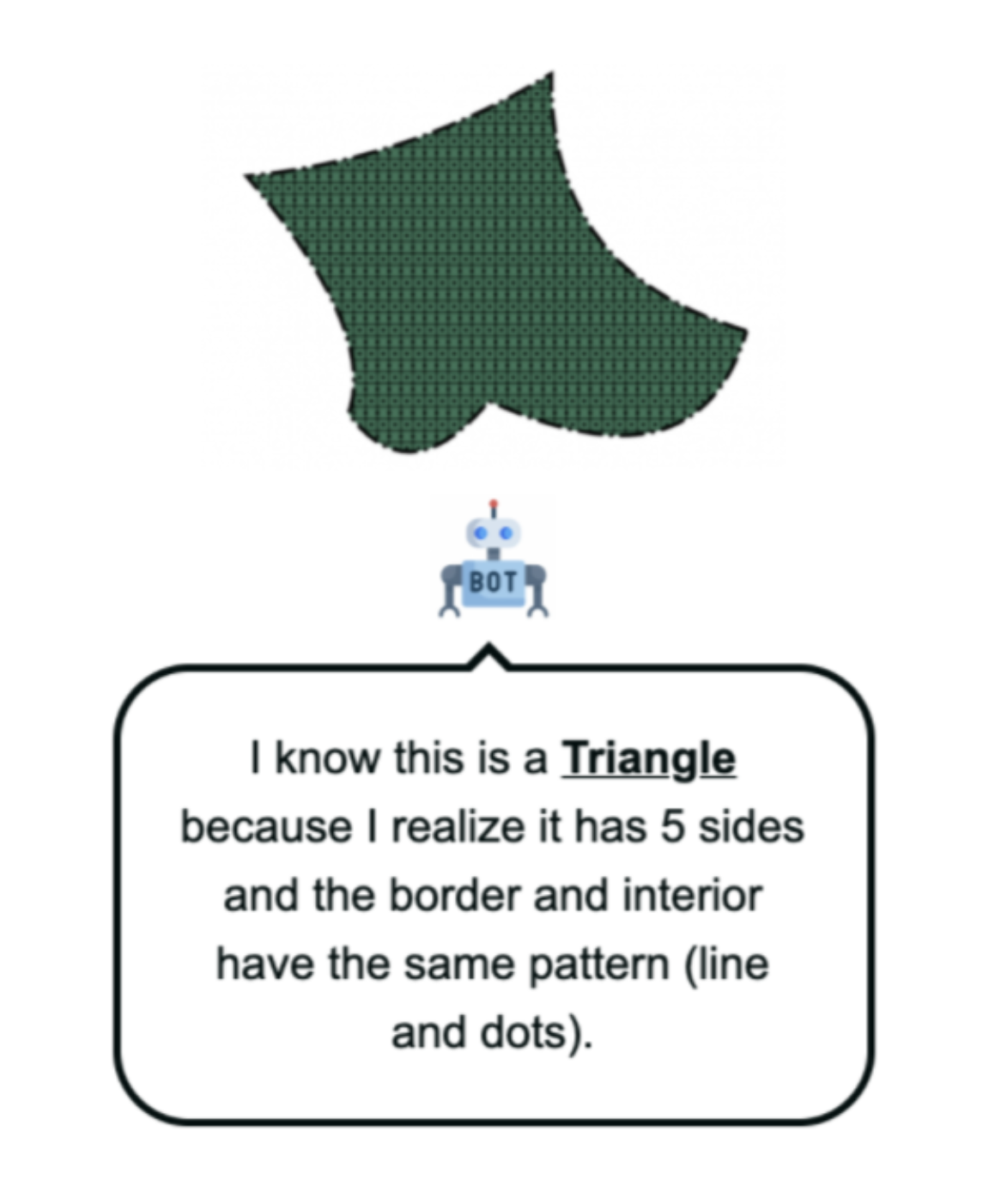

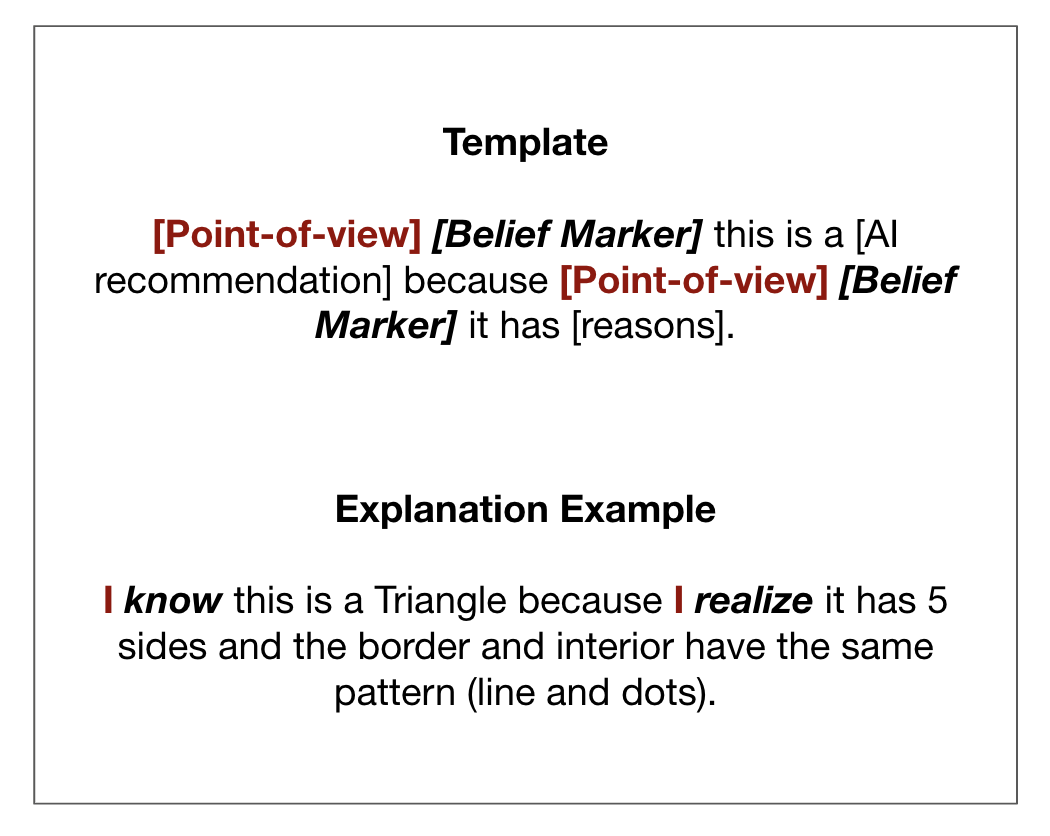

In the second study, we added natural language explanations to the AI’s recommendations and varied their phrasing, using either embracing or distancing language, to examine how wording affects trust, reliance, and performance. Embracing language led participants to agree with the AI more often, while distancing language led them to agree with it less often. This suggests that the way an AI phrases its explanations can subtly steer people toward appropriate reliance, matched to the accuracy of its recommendations.

This research sheds light on how people trust and rely on AI systems with different levels of expertise, and on how the language used in AI explanations shapes that relationship. The findings offer insights for designing AI assistants that communicate their expertise effectively, foster appropriate trust and reliance, and improve human-AI collaboration and decision making across a range of domains.

Publication

- Zhang, Q., Lee, M. L., & Carter, S. (2022, April). You complete me: Human-AI teams and complementary expertise. In Proceedings of the 2022 CHI Conference on Human Factors in Computing Systems (pp. 1–28).